Crawling is the process search engines use to discover new and updated content on the web, so that their databases stay up to date.

A search engine periodically revisits the websites it knows about. If it finds anything new, such as a new website, a new web page, or changed content, the search engine's database is updated automatically.

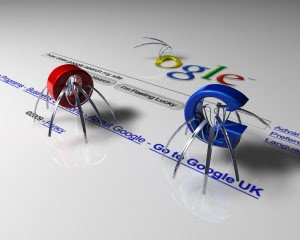

Search engines crawl the web using spiders, also called bots. A spider is a program created by a search engine to crawl the web periodically and update the content and links stored in the search engine's database.
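To make the idea concrete, here is a minimal sketch of a spider in Python. It is only an illustration, not how any real search engine works: the `WEB` dictionary is a made-up stand-in for the internet (so no network access is needed), and the names `crawl`, `WEB`, and `database` are hypothetical. The spider starts from one page, follows links to pages it has not seen yet, and records any page that is new or whose content has changed.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
WEB = {
    "a.example/": ("home page", ["a.example/about", "b.example/"]),
    "a.example/about": ("about us", ["a.example/"]),
    "b.example/": ("another site", []),
}

def crawl(seed, web, database):
    """Crawl breadth-first from `seed`, following links between pages.

    New or changed pages are written to `database` (URL -> text).
    Returns the list of URLs that were added or updated in this pass.
    """
    queue = deque([seed])
    seen = {seed}
    updated = []
    while queue:
        url = queue.popleft()
        if url not in web:          # dead link: nothing to fetch
            continue
        text, links = web[url]
        if database.get(url) != text:
            database[url] = text    # new page or changed content
            updated.append(url)
        for link in links:
            if link not in seen:    # visit each page once per pass
                seen.add(link)
                queue.append(link)
    return updated
```

Calling `crawl("a.example/", WEB, {})` on an empty database reports all three pages as updated. Running it again with the same database reports nothing, because nothing has changed. That is the "periodic" part: each later pass only picks up what is new.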

Indexing

Indexing comes after crawling. It is the process by which a search engine keeps an index of up-to-date website links and content in its database.

The index stores the relevant keywords found in each web page's content. When a user searches for something, the search engine shows results according to those keywords.
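A simple way to picture this is a keyword index that maps each word to the pages containing it. The sketch below is an assumption-laden illustration in Python, not a real search engine's design: `build_index` and `search` are hypothetical names, every word is treated as a keyword, and a page matches only if it contains all the words in the query.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Split text into lowercase words (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(pages):
    """Build a keyword index: word -> set of URLs whose text contains it.

    `pages` maps URL -> page text, e.g. the database filled by a crawler.
    """
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index

def search(index, query):
    """Return the sorted URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return []
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return sorted(results)
```

For example, with the pages `{"a": "Search engines crawl the web", "b": "Spiders crawl links"}`, searching for `crawl` returns both pages, while searching for `crawl web` returns only `a`.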

Labels:

Search Engines
